Globally, policymakers are debating governance approaches to regulate automated systems, especially in response to growing anxiety about unethical use of generative AI technologies such as ChatGPT and DALL-E. Legislators and regulators are understandably concerned with limiting the most serious consequences of AI systems without stifling innovation through onerous government regulations. Fortunately, there is no need to start from scratch.

As explained in the IEEE-USA article “How Should We Regulate AI?,” the IEEE 1012 Standard for System, Software, and Hardware Verification and Validation already offers a road map for focusing regulation and other risk management actions.

Introduced in 1988, IEEE 1012 has a long history of practical use in critical environments. The standard applies to all software and hardware systems, including those based on emerging generative AI technologies. IEEE 1012 is used to verify and validate many critical systems, including medical tools, the U.S. Department of Defense’s weapons systems, and NASA’s manned space vehicles.

In discussions of AI risk management and regulation, many approaches are being considered. Some are based on specific technologies or application areas, while others consider the size of the company or its user base. Some approaches either place low-risk systems in the same category as high-risk systems or leave gaps where regulations would not apply. It is therefore understandable that the growing number of proposals for government regulation of AI systems is creating confusion.

Determining risk levels

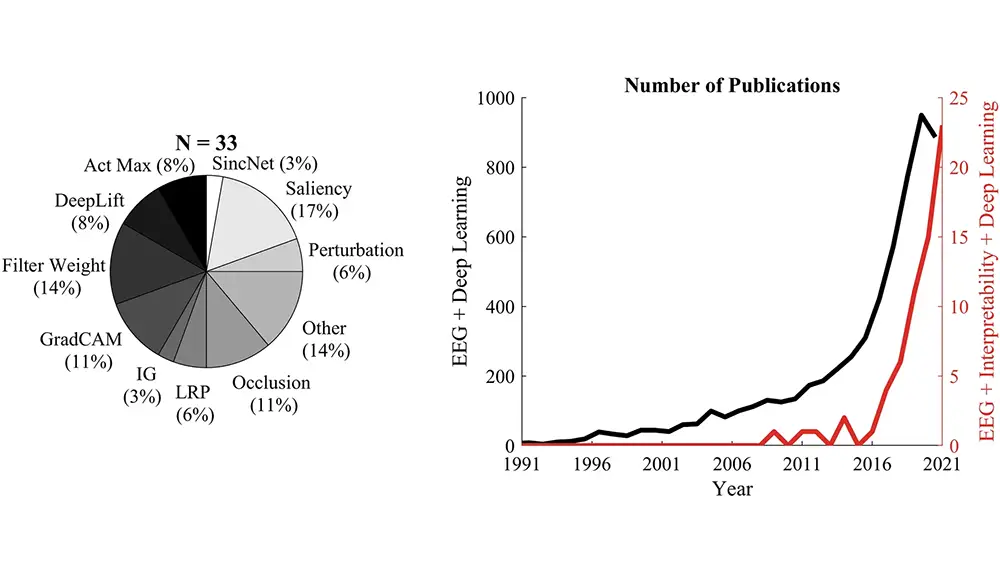

IEEE 1012 focuses risk management resources on the systems with the most risk, regardless of other factors. It does so by determining risk as a function of both the severity of consequences and their likelihood of occurring, and then it assigns the most intense levels of risk management to the highest-risk systems. The standard can distinguish, for example, between a facial recognition system used to unlock a cellphone (where the worst consequence might be relatively light) and a facial recognition system used to identify suspects in a criminal justice application (where the worst consequence could be severe).

IEEE 1012 presents a specific set of activities for the verification and validation (V&V) of any system, software, or hardware. The standard maps four levels of likelihood (reasonable, probable, occasional, infrequent) and four levels of consequence (catastrophic, critical, marginal, negligible) to a set of four integrity levels (see Table 1). The intensity and depth of the V&V activities vary according to where the system falls along the range of integrity levels (from 1 to 4). Systems at integrity level 1 have the lowest risks and receive the lightest V&V. Systems at integrity level 4 could have catastrophic consequences and warrant substantial risk management throughout the life of the system. Policymakers can follow a similar process to target regulatory requirements to AI applications with the most risk.
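
The mapping described above can be pictured as a simple lookup from a consequence level and a likelihood level to an integrity level. The Python sketch below is only an illustration of that idea: the names `Consequence`, `Likelihood`, `ILLUSTRATIVE_MATRIX`, and `integrity_level` are hypothetical, and the numbers in the matrix are placeholder assumptions, not the values published in the standard's Table 1. The classifications given to the two facial recognition examples are likewise assumed for illustration.

```python
from enum import Enum


class Consequence(Enum):
    CATASTROPHIC = "catastrophic"
    CRITICAL = "critical"
    MARGINAL = "marginal"
    NEGLIGIBLE = "negligible"


class Likelihood(Enum):
    REASONABLE = "reasonable"
    PROBABLE = "probable"
    OCCASIONAL = "occasional"
    INFREQUENT = "infrequent"


# Column order used by every row of the matrix below.
LIKELIHOOD_ORDER = (
    Likelihood.REASONABLE,
    Likelihood.PROBABLE,
    Likelihood.OCCASIONAL,
    Likelihood.INFREQUENT,
)

# ASSUMPTION: placeholder integrity levels chosen for illustration only.
# They are NOT copied from IEEE 1012; consult the standard for real values.
ILLUSTRATIVE_MATRIX = {
    Consequence.CATASTROPHIC: (4, 4, 4, 3),
    Consequence.CRITICAL: (4, 3, 3, 2),
    Consequence.MARGINAL: (3, 2, 2, 1),
    Consequence.NEGLIGIBLE: (2, 1, 1, 1),
}


def integrity_level(consequence: Consequence, likelihood: Likelihood) -> int:
    """Return the (illustrative) integrity level, 1 to 4, for one system."""
    column = LIKELIHOOD_ORDER.index(likelihood)
    return ILLUSTRATIVE_MATRIX[consequence][column]


# Hypothetical classifications of the two facial recognition examples.
phone_unlock = integrity_level(Consequence.MARGINAL, Likelihood.OCCASIONAL)
suspect_identification = integrity_level(Consequence.CRITICAL, Likelihood.PROBABLE)
print(phone_unlock, suspect_identification)
```

Under these assumed inputs, the same underlying technology lands at different integrity levels depending on how it is used, which is the property that lets V&V effort, or regulatory effort, be concentrated on the riskiest applications.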
